High-Volume Dataset Integration for 910150008, 9481100096, 8445850488, 692192987, 649662314, 934396732

High-volume dataset integration poses significant challenges, particularly for datasets associated with identifiers such as 910150008 and 9481100096. Disparate data silos can hinder effective analysis and decision-making, and methodologies such as data normalization and schema alignment are essential for overcoming these obstacles. Even so, ensuring data quality and consistency remains complex. As organizations work to unify these datasets, it is worth examining the strategies that lead to successful integration outcomes.

Understanding the Challenges of High-Volume Dataset Integration

High-volume dataset integration presents many challenges that require careful planning.

Organizations often encounter data silos, where information is held in separate systems that do not share it, and these silos hinder access to critical information. Selecting and implementing appropriate integration tools is essential to bridge these gaps.

Navigating these complexities requires a methodical approach: disparate datasets must be unified into a coherent whole while data integrity and accessibility are preserved for informed decision-making.
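As a minimal sketch of what unifying siloed records can look like, the Python below combines records from several named sources into one view per key. It assumes every source shares a key field (called `id` here, a hypothetical name) and that the source names `crm` and `billing` are purely illustrative. Rather than silently overwriting, it keeps the first value seen for each field and records later disagreements, which preserves data integrity for later review.

```python
from typing import Dict, Iterable

Record = Dict[str, object]

def unify_by_key(sources: Dict[str, Iterable[Record]], key: str = "id") -> Dict[object, Record]:
    """Combine records from several named sources into one view per key.

    The first source to supply a field wins; later differing values are
    appended to '_conflicts' as (field, source, value) instead of overwriting.
    '_sources' lists every source that contributed to the merged record.
    """
    merged: Dict[object, Record] = {}
    for source_name, records in sources.items():
        for rec in records:
            k = rec[key]
            target = merged.setdefault(k, {key: k, "_sources": [], "_conflicts": []})
            target["_sources"].append(source_name)
            for field, value in rec.items():
                if field == key:
                    continue
                if field not in target:
                    target[field] = value  # fill a gap from this source
                elif target[field] != value:
                    target["_conflicts"].append((field, source_name, value))
    return merged

# Hypothetical example data from two silos.
crm = [{"id": 1, "name": "Acme", "region": "EU"}]
billing = [{"id": 1, "name": "ACME Ltd", "balance": 120.0}, {"id": 2, "balance": 0.0}]
view = unify_by_key({"crm": crm, "billing": billing})
```

Here record 1 keeps `name` from `crm` and gains `balance` from `billing`, while the differing name `"ACME Ltd"` is logged as a conflict rather than lost. Real pipelines typically replace the "first source wins" rule with a source-priority policy agreed with data owners.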

Key Methodologies for Effective Data Merging

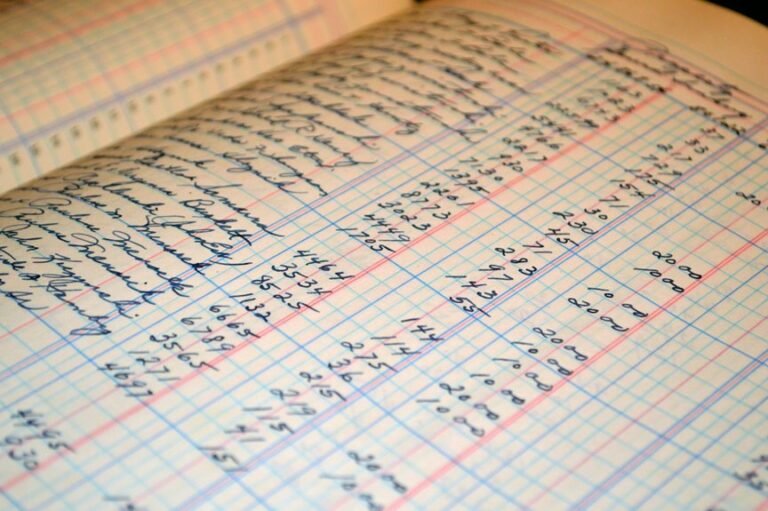

Effective data merging depends on methodologies that systematically address the complexities of integrating diverse datasets.

Key strategies include data normalization, which converts values such as dates, codes, and identifiers into a single standard format across sources, and schema alignment, which maps each source's fields onto a shared structure so that field names, types, and relationships agree.

Together, these methodologies make integration more reliable, allowing organizations to draw insights from high-volume datasets while keeping the combined data clear and coherent.
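The two techniques can be combined in a single per-record step. The sketch below is illustrative only: the source names (`source_a`, `source_b`), their field names, and the accepted date formats are all hypothetical assumptions. Schema alignment is done with a mapping from canonical field names to each source's field names; normalization trims identifiers, upper-cases country codes, and rewrites dates as ISO 8601.

```python
from datetime import datetime
from typing import Dict, Optional

# Hypothetical canonical schema: canonical field -> field name in each source.
SCHEMA_MAP: Dict[str, Dict[str, str]] = {
    "source_a": {"customer_id": "CustID", "signup_date": "Joined", "country": "Ctry"},
    "source_b": {"customer_id": "id", "signup_date": "created_at", "country": "country_code"},
}

# Assumed input date formats; slash dates are treated as day-first.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def normalize_date(raw: str) -> Optional[str]:
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def align_record(source: str, rec: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Rename a source record's fields to the canonical schema and normalize values."""
    out: Dict[str, Optional[str]] = {}
    for canonical, source_field in SCHEMA_MAP[source].items():
        value = rec.get(source_field)
        if value is None:
            out[canonical] = None  # field absent in this source
        elif canonical == "signup_date":
            out[canonical] = normalize_date(value)
        elif canonical == "country":
            out[canonical] = value.strip().upper()
        else:
            out[canonical] = value.strip()
    return out

a = align_record("source_a", {"CustID": " 42 ", "Joined": "05/03/2021", "Ctry": "de"})
b = align_record("source_b", {"id": "42", "created_at": "2021-03-05", "country_code": "DE "})
```

After alignment, `a` and `b` are identical records, so they can be compared or merged directly. Note the design choice around dates: a value such as `05/03/2021` is ambiguous between day-first and month-first, so the convention has to be fixed per source rather than guessed.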

Ensuring Data Quality and Consistency

Ensuring data quality and consistency is paramount for organizations that seek to derive meaningful insights from integrated datasets.

Rigorous data validation processes are essential for identifying inaccuracies and discrepancies. In addition, consistency checks across data sources help ensure that the information remains uniform and reliable.
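A minimal sketch of both ideas follows, assuming records are plain dictionaries with hypothetical `id` and `amount` fields. Validation applies named rules to each record and reports which ones fail; the consistency check compares two sources keyed by the same identifier and reports keys present in only one source as well as field-level mismatches. The rules shown are placeholders; real rules would come from the organization's own data definitions.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]
Rule = Tuple[str, Callable[[Record], bool]]

# Hypothetical validation rules; each check returns True when the record passes.
RULES: List[Rule] = [
    ("id present", lambda r: r.get("id") not in (None, "")),
    ("amount non-negative",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
]

def validate(records: List[Record], rules: List[Rule] = RULES) -> List[Tuple[int, str]]:
    """Return (row index, rule name) for every rule a record fails."""
    return [(i, name) for i, rec in enumerate(records)
            for name, check in rules if not check(rec)]

def consistency_diff(a: Dict[object, Record], b: Dict[object, Record],
                     fields: List[str]) -> List[Tuple[object, str, object, object]]:
    """Compare two sources keyed by id.

    Keys found in only one source yield (key, "missing", in_a, in_b);
    differing fields yield (key, field, value_in_a, value_in_b).
    """
    issues: List[Tuple[object, str, object, object]] = []
    for key in sorted(set(a) | set(b), key=str):
        if key not in a or key not in b:
            issues.append((key, "missing", key in a, key in b))
            continue
        for f in fields:
            if a[key].get(f) != b[key].get(f):
                issues.append((key, f, a[key].get(f), b[key].get(f)))
    return issues
```

Keeping rule names alongside the failures makes the output usable as a data-quality report, not just a pass/fail flag.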

Case Studies: Successful Integration Strategies

The successful integration of datasets often hinges on the strategies employed by organizations to address challenges related to data quality and consistency.

Effective data governance, the use of robust integration tools, and proactive stakeholder engagement are critical.

Additionally, addressing scalability issues and establishing clear performance metrics within project timelines helps ensure that integration efforts yield sustainable results and that the data remains accessible and useful over the long term.
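One common way to address scalability is to process records in fixed-size batches so that memory use stays bounded regardless of dataset size, while recording simple metrics on each run. The sketch below assumes a caller-supplied, hypothetical `process` function that writes a batch and returns how many records it stored; the metrics chosen (batch count, records in and out, throughput) are illustrative assumptions, not a standard set.

```python
import time
from itertools import islice
from typing import Callable, Dict, Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield successive lists of at most `size` items without materializing the input."""
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch

def run_in_batches(items: Iterable[T], process: Callable[[List[T]], int],
                   size: int = 10_000) -> Dict[str, float]:
    """Feed items to `process` batch by batch and return simple run metrics."""
    start = time.perf_counter()
    batches = seen = written = 0
    for batch in batched(items, size):
        written += process(batch)  # `process` reports how many records it stored
        seen += len(batch)
        batches += 1
    elapsed = time.perf_counter() - start
    return {
        "batches": batches,
        "records_in": seen,
        "records_written": written,
        "seconds": elapsed,
        "records_per_second": seen / elapsed if elapsed > 0 else float("inf"),
    }
```

Comparing `records_in` with `records_written` across runs gives an early signal of dropped records, and tracking `records_per_second` over time shows whether throughput keeps pace as volumes grow.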

Conclusion

High-volume dataset integration requires organizations to work through substantial challenges before they can uncover valuable insights. By employing methodologies such as data normalization and schema alignment, they can turn disparate datasets into a coherent whole. Ultimately, a sustained commitment to data quality and continuous governance guides decision-makers through this complexity, supporting greater operational efficiency and better strategic planning.